A review article on the future of neuromorphic computing, written by an international team of researchers including co-author Dr Anand Subramoney of the Department of Computer Science at Royal Holloway, was published in the leading science journal Nature on 22 January 2025.

The article, titled ‘Neuromorphic Computing at Scale,’ examines the state of neuromorphic technology and presents a strategy for building large-scale neuromorphic systems.

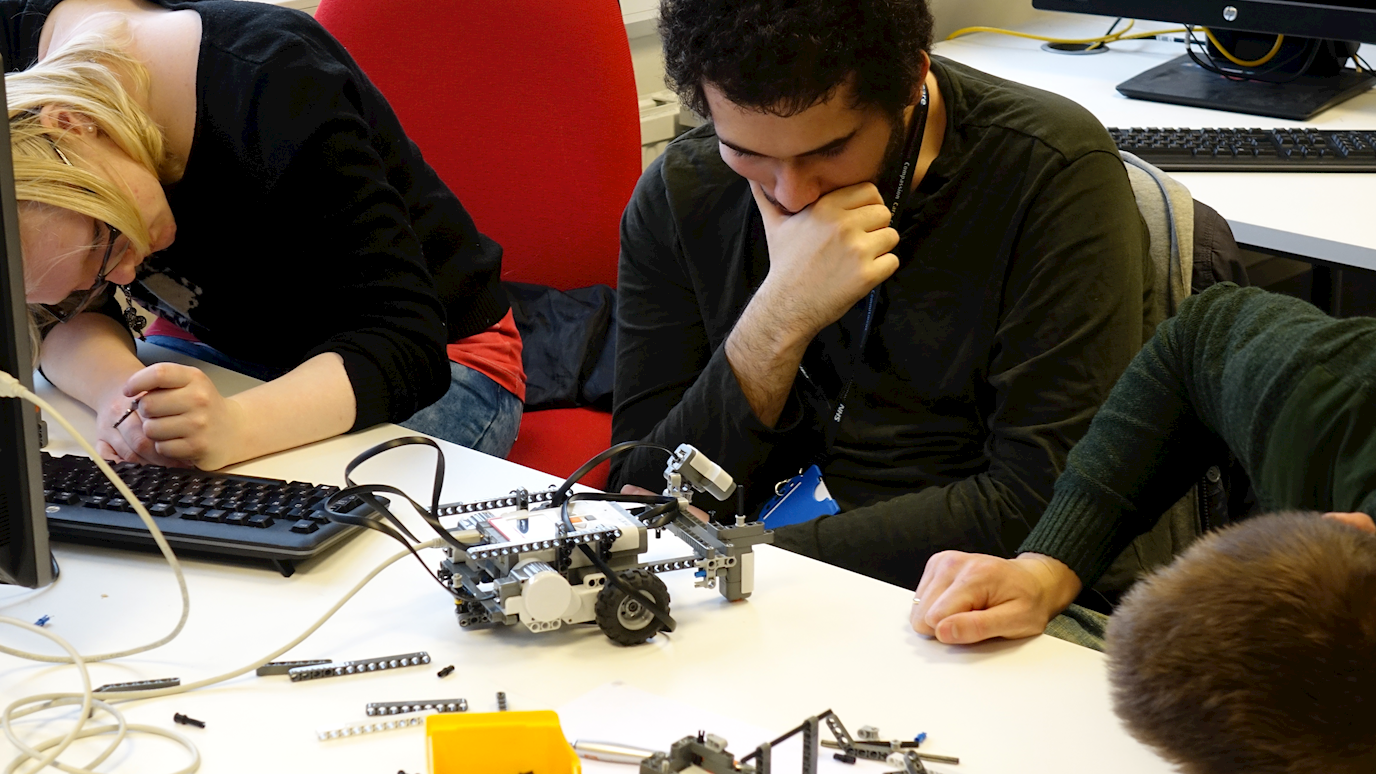

The research is part of a broader effort to advance neuromorphic computing, a field that applies principles of neuroscience to computing systems to mimic the brain’s function and structure. Neuromorphic chips have the potential to outpace traditional computers in energy and space efficiency as well as performance, presenting substantial advantages across various domains, including artificial intelligence, health care and robotics. As the electricity consumption of AI is projected to double by 2026, neuromorphic computing emerges as a promising solution.

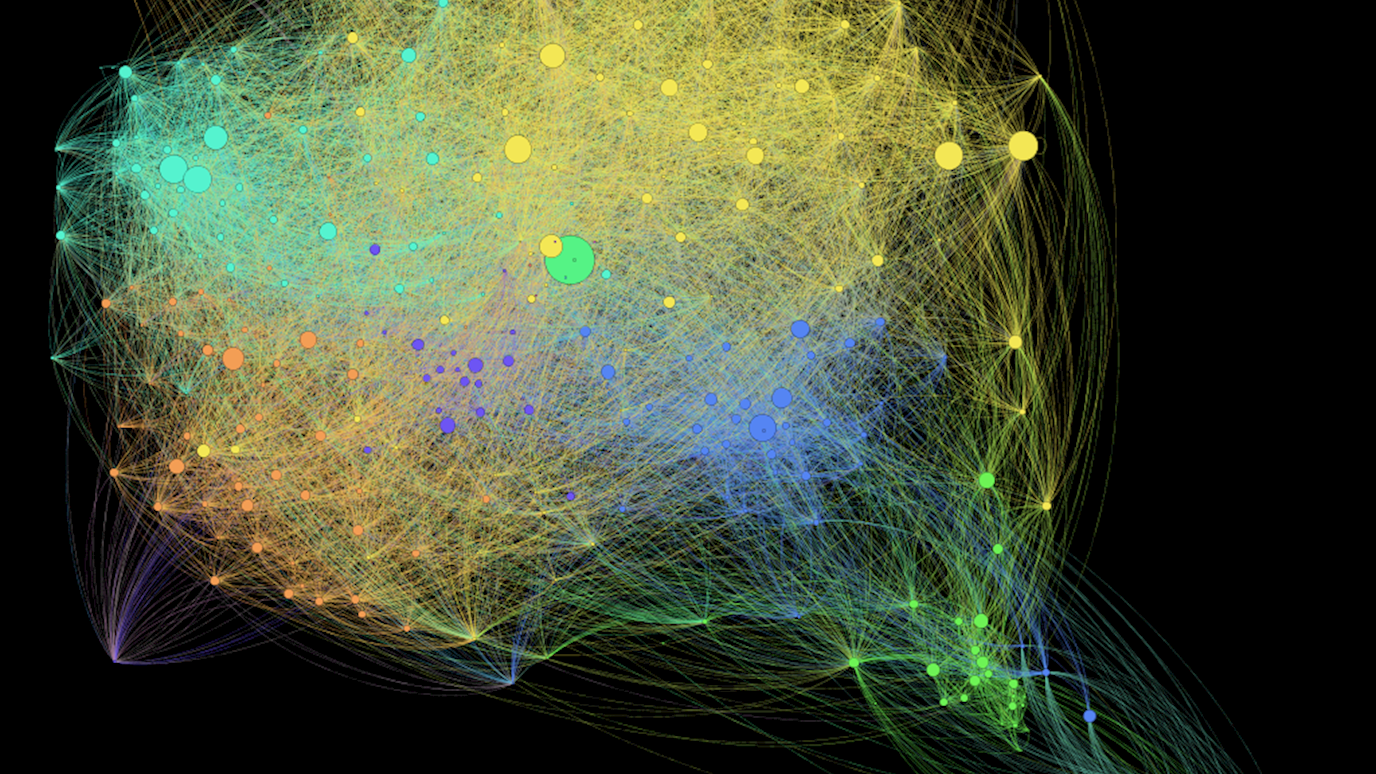

In the article, the authors say that neuromorphic systems are reaching a “critical juncture,” with scale being a key metric for tracking the progress of the field. Neuromorphic systems are growing rapidly, with Intel's Hala Point already containing 1.15 billion neurons. The authors argue that these systems will still need to grow considerably larger to tackle highly complex, real-world challenges.

Lead author Dhireesha Kudithipudi of the University of Texas at San Antonio (UTSA), founding director of MATRIX: The UTSA AI Consortium for Human Well-Being, said,

“Neuromorphic computing is at a pivotal moment, reminiscent of the AlexNet-like moment for deep learning. We are now at a point where there is a tremendous opportunity to build new architectures and open frameworks that can be deployed in commercial applications. I strongly believe that fostering tight collaboration between industry and academia is the key to shaping the future of this field. This collaboration is reflected in our team of co-authors.”

To achieve scale in neuromorphic computing, the team proposes several key features that must be optimised, including sparsity, a defining feature of the human brain. The brain develops by forming numerous neural connections (densification) before selectively pruning most of them. This strategy optimises spatial efficiency while retaining information at high fidelity. If successfully emulated, this feature could enable neuromorphic systems that are significantly more energy-efficient and compact.

Co-author Dr Anand Subramoney of Royal Holloway, whose research focuses on scaling up neuromorphic algorithms by designing them from first principles to be scalable both algorithmically and in implementation, commented,

“This research explores the perspective that to be able to solve challenging real-world tasks, the community needs to be goal oriented about algorithmic design to achieve scalability. This means that the community must move away from the traditional perspective of trying to model biology in detail even though the goal is to develop AI algorithms. That is, we want to have algorithms that are inspired by neuroscientific data without being constrained by it.”

“Neuromorphic computing is a branch of computing that attempts to develop AI algorithms and hardware that are inspired by the brain and 10-1000 times more energy efficient than current standard hardware. While there has been a lot of work done in this field, it hasn’t reached a point where it’s able to scale up to the same extent that current large language models (LLMs) such as ChatGPT are able to. This perspective paper describes how and which existing works can be leveraged and what the neuromorphic community must focus on if they want to successfully scale neuromorphic AI algorithms to reach a point where they can start to solve challenging real-world problems.”

Steve Furber, emeritus professor of computer engineering at the University of Manchester, is also among the authors on the project. Furber specialises in neural systems engineering and asynchronous systems, and led the development of the million-core SpiNNaker1 neuromorphic computing platform at Manchester and co-developed SpiNNaker2 with TU Dresden.

“Twenty years after the launch of the SpiNNaker project, it seems that the time for neuromorphic technology has finally come, and not just for brain modeling, but also for wider AI applications, notably to address the unsustainable energy demands of large, dense AI models,” said Furber. “This paper captures the state of neuromorphic technology at this key point in its development, as it is poised to emerge into full-scale commercial use.”

The review brought together an international team of researchers from leading institutions, national laboratories and industry partners, including Google DeepMind, Intel Labs, Sandia National Laboratories and the National Institute of Standards and Technology, among others.

The full review paper is available in Nature.