The Audio, Biosignals and Machine Learning Group aims to deepen our understanding of human voice communication and sound transmission, as well as of biomedical signals for diagnostic and therapeutic applications in active human health monitoring.

Our ability to communicate by voice (speech and singing) is crucial to human existence. Furthering our understanding of the relevant processes enhances knowledge of the bioacoustic processes involved and provides a route to improving analysis and communication systems in the future.

Our interest in bio-engineering is not limited to the human voice. Making sense of biomedical signals for healthcare, ranging from the electrocardiogram (ECG) to the more complex electroencephalogram (EEG), figures prominently amongst our research activities. Artificial neural networks in the form of deep learning, together with advanced signal processing methods, enable us to provide new technological tools for healthcare.

Our bio-inspired endeavour also brings physicality to our modelling, as this is a crucial aspect of engineering. We are currently investigating how advanced System-on-Chip platforms (such as multiprocessor system-on-chip, or MPSoC, architectures) can assist in the modelling and implementation of olfactory signal processing and recognition. Sensors, signal acquisition and processing, and ML algorithms work hand in hand with reconfigurable software-hardware frameworks to create systems capable of identifying various volatile organic compounds (VOCs) or implementing air pollution analysis techniques.

Research areas in Voice & Audio

Voice development in singing

Singing is an activity that many people enjoy and find deeply rewarding for a number of reasons. We are studying voice development and voice change in girls (Professor Graham Welch, Dr Evangelos Himonides and I record the girl choristers at Wells Cathedral every six months) and in boys who are and are not cathedral choristers (Professor Martin Ashley and I), with a view to improving our understanding of the nature of the normal and pathological growing human voice.

Contact supervisor David Howard

Tuning in choral singing

How a choir tunes the notes of chords is basic to improving the overall sound, blend and listening pleasure for audience and choir alike. Most important is the relationship between tuning and perceived consonance, which is heightened when the individual notes of chords are tuned in just intonation rather than equal temperament. On the face of it, this sounds easy to measure by tracking the fundamental frequency of each voice. However, other factors affect our perception of pitch, and our work is investigating these. We have worked with a quartet from the auditioned Royal Holloway Chapel Choir.
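To give a sense of the size of the tuning differences involved, the sketch below expresses the gap between just and equal-tempered intervals in cents (1200 cents per octave). It uses only the standard textbook ratios (5/4 for a just major third, 3/2 for a just fifth); it does not model the perceptual factors our work investigates, and the helper names are illustrative.

```python
import math

def cents(ratio: float) -> float:
    """Size of a frequency ratio in cents (1200 cents per octave)."""
    return 1200.0 * math.log2(ratio)

# Just-intonation frequency ratios for two intervals of a major triad above its root.
JUST_RATIOS = {"major third": 5 / 4, "perfect fifth": 3 / 2}
# The same intervals in equal temperament, counted in semitones (100 cents each).
EQUAL_SEMITONES = {"major third": 4, "perfect fifth": 7}

def just_minus_equal(interval: str) -> float:
    """Cents by which the just interval lies above (+) or below (-) its equal-tempered version."""
    return cents(JUST_RATIOS[interval]) - 100.0 * EQUAL_SEMITONES[interval]

for name in JUST_RATIOS:
    print(f"{name}: {just_minus_equal(name):+.2f} cents")
```

The just major third comes out roughly 14 cents narrower than its equal-tempered counterpart, while the fifths differ by only about 2 cents, which is why the tuning of the third in a chord matters so much for perceived consonance.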

Contact supervisor David Howard

Synthesis of speech

Speech synthesis is commonplace in many systems where a message needs to be communicated, but the synthetic output, although highly intelligible, is rarely if ever mistaken for a human voice. The driver for this work is a better understanding of which acoustic cues in speech convey naturalness (as opposed to intelligibility); it examines the true 3-D shapes of the human vocal tract and how to synthesise speech from them. Work with the University of York (Department of Archaeology) and Leeds Museum is examining the vocal tract of an Egyptian mummy.
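As a point of reference for vocal-tract-based synthesis, the simplest acoustic model treats the tract as a uniform tube closed at the glottis and open at the lips, whose resonances (formants) fall at odd multiples of a quarter wavelength. The sketch below assumes a speed of sound of 350 m/s and a tract length of 17.5 cm; it is a textbook baseline, not the 3-D modelling used in this work.

```python
def uniform_tube_formants(length_m: float, n: int = 3, c: float = 350.0) -> list[float]:
    """First n resonances (Hz) of a lossless uniform tube, closed at one end and open at the other.

    F_k = (2k - 1) * c / (4 * L): the classic quarter-wavelength approximation
    for a neutral (schwa-like) vocal tract. c is the assumed speed of sound in m/s.
    """
    return [(2 * k - 1) * c / (4.0 * length_m) for k in range(1, n + 1)]

print(uniform_tube_formants(0.175))  # adult-like tract length
print(uniform_tube_formants(0.12))   # shorter, child-like tract: every formant rises
```

Real vowels depart from these values because the cross-sectional area varies along the tract, which is exactly the information a measured 3-D vocal tract shape supplies.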

Contact supervisor David Howard

The Vocal Tract Organ

This work is an offshoot of the synthesis work using 3-D vocal tracts. It makes use of 3-D printed vocal tracts for vowels, placed atop loudspeaker drivers that are driven by a synthetic larynx source waveform whose pitch and amplitude can be varied. The organ can be played via a standard music keyboard or via joysticks. Performances with the organ have included a flash-mob opera, after-dinner events for the Royal Academy of Engineering, science fairs and public engagement sessions. The aim of this work is to investigate the organ's potential as a new musical instrument, including as a modern Vox Humana pipe organ stop with Harrison and Harrison Organ Builders in Durham (existing Vox Humana stops sound most unlike the human voice!).
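A minimal sketch of a keyboard-controlled larynx source is shown below. The page does not specify which source waveform the organ uses, so this swaps in the Rosenberg glottal pulse, a standard textbook model of glottal flow, and assumes the keyboard sends MIDI note numbers tuned to A4 = 440 Hz in equal temperament. The opening and closing fractions are illustrative values, and all function names are hypothetical.

```python
import math

def midi_to_hz(note: int) -> float:
    """Equal-tempered pitch of a MIDI note number, assuming A4 (note 69) = 440 Hz."""
    return 440.0 * 2.0 ** ((note - 69) / 12.0)

def rosenberg_source(f0: float, amplitude: float, duration_s: float, fs: int = 16000,
                     open_frac: float = 0.4, close_frac: float = 0.16) -> list[float]:
    """Train of Rosenberg glottal pulses at pitch f0 with peak value `amplitude`.

    Each period has an opening phase (raised cosine), a faster closing phase
    (quarter cosine) and a closed phase (zero flow). open_frac/close_frac are
    the assumed fractions of the period spent opening and closing.
    """
    period = 1.0 / f0
    tp, tn = open_frac * period, close_frac * period
    samples = []
    for i in range(int(duration_s * fs)):
        t = (i / fs) % period                                   # time within the current cycle
        if t <= tp:
            g = 0.5 * (1.0 - math.cos(math.pi * t / tp))        # glottis opening
        elif t <= tp + tn:
            g = math.cos(math.pi * (t - tp) / (2.0 * tn))       # glottis closing
        else:
            g = 0.0                                             # glottis closed
        samples.append(amplitude * g)
    return samples

# Pressing A3 (MIDI note 57) at moderate loudness gives a 220 Hz source.
source = rosenberg_source(midi_to_hz(57), amplitude=0.8, duration_s=0.05)
```

Pitch and amplitude map directly onto the two parameters the organ's player controls; a joystick could drive the same function by mapping its axes to `f0` and `amplitude`.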

Contact supervisor David Howard

Speech production and Parkinson's disease

The change in muscular control with the onset of Parkinson's disease also manifests itself in speech production, possibly earlier than limb tremor does. We are working with Dr Steve Smith from the University of York (Department of Electronics) to analyse the speech of patients with Parkinson's disease, with a view to assessing whether onset might be detected earlier.
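Two measures often reported in voice-based studies of Parkinson's disease are jitter (cycle-to-cycle variation in glottal period) and shimmer (cycle-to-cycle variation in amplitude). The sketch below computes their "local" forms, assuming the per-cycle periods and peak amplitudes have already been extracted from a sustained vowel; it is not necessarily the analysis used in this project, and the input values are hypothetical.

```python
def local_perturbation(values: list[float]) -> float:
    """Mean absolute difference between consecutive values, divided by the mean value.

    Applied to glottal periods this is local jitter; applied to per-cycle
    peak amplitudes it is local shimmer.
    """
    if len(values) < 2:
        raise ValueError("need at least two cycles")
    diffs = [abs(a - b) for a, b in zip(values, values[1:])]
    return (sum(diffs) / len(diffs)) / (sum(values) / len(values))

periods_s = [0.0080, 0.0082, 0.0079, 0.0081, 0.0080]   # hypothetical cycle lengths (s)
amplitudes = [0.71, 0.69, 0.73, 0.70, 0.72]            # hypothetical peak amplitudes
print(f"jitter  {100 * local_perturbation(periods_s):.2f} %")
print(f"shimmer {100 * local_perturbation(amplitudes):.2f} %")
```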

Contact supervisor David Howard

Hearing modelling

A crucial part of all audio work is a better understanding of how the human hearing system works, which encompasses psychoacoustics, hearing physiology, the neural transmission of information and high-level signal processing. Whilst much of this cannot be measured directly, listening experiments can provide significant, useful information for improving analysis systems.
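One widely used result of such listening experiments is the equivalent rectangular bandwidth (ERB) of the auditory filters, as fitted by Glasberg and Moore (1990). The sketch below implements that formula and uses the related ERB-number scale to space the centre frequencies of a hearing-model filterbank; it illustrates a standard psychoacoustic model rather than the group's own models, and the function names are illustrative.

```python
import math

def erb_bandwidth_hz(f_hz: float) -> float:
    """ERB (Hz) of the auditory filter centred at f_hz (Glasberg & Moore, 1990)."""
    return 24.7 * (4.37 * f_hz / 1000.0 + 1.0)

def hz_to_erb_number(f_hz: float) -> float:
    """Position of f_hz on the ERB-number scale."""
    return 21.4 * math.log10(4.37 * f_hz / 1000.0 + 1.0)

def erb_number_to_hz(e: float) -> float:
    """Inverse of hz_to_erb_number."""
    return (10.0 ** (e / 21.4) - 1.0) * 1000.0 / 4.37

def erb_spaced_centres(f_low: float, f_high: float, n: int) -> list[float]:
    """n centre frequencies equally spaced on the ERB-number scale (e.g. for a gammatone-style filterbank)."""
    lo, hi = hz_to_erb_number(f_low), hz_to_erb_number(f_high)
    step = (hi - lo) / (n - 1)
    return [erb_number_to_hz(lo + k * step) for k in range(n)]

print(f"ERB at 1 kHz: {erb_bandwidth_hz(1000.0):.1f} Hz")
print([round(f) for f in erb_spaced_centres(100.0, 8000.0, 8)])
```

Equal spacing on the ERB-number scale places more filters at low frequencies, mirroring the finer frequency resolution of the cochlea there.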

Contact supervisor David Howard

Biomedical Signal De-noising

With the increasing use of technology in medicine and of devices that acquire signals from the human body, there is a need to process these data carefully. Biomedical signals of interest include, to name a few, electrocardiogram (ECG), electroencephalogram (EEG), electromyogram (EMG) and electrolaryngograph (ELG) signals. All of these signals originate in the human body and suffer from interference and degradation during acquisition. Typically they suffer from baseline wander due to body motion, from mains (power-line) interference and often from stray electromagnetic interference (EMI) in the form of spikes. Furthermore, these small electrical signals often interfere with one another. One of our research themes is the use of advanced signal processing techniques to separate these signals of interest from each other and from unwanted sources of noise.
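Before any advanced separation, two of the artefacts named above are commonly handled with classic filters: slow baseline wander with a high-pass stage and mains interference with a narrow notch. The sketch below shows that conventional first stage using NumPy only. It assumes 50 Hz UK mains, a 0.6 s moving-average window and a notch pole radius of 0.98, all illustrative choices; it is not the separation method this research theme develops.

```python
import math
import numpy as np

def remove_baseline(x, fs, window_s=0.6):
    """Subtract a centred moving average (a simple high-pass) to suppress slow baseline wander."""
    x = np.asarray(x, dtype=float)
    n = max(1, int(window_s * fs)) | 1                 # force an odd window length
    padded = np.pad(x, n // 2, mode="edge")           # extend the ends to limit edge effects
    baseline = np.convolve(padded, np.ones(n) / n, mode="valid")
    return x - baseline

def notch(x, fs, f0=50.0, r=0.98):
    """Second-order IIR notch: zeros on the unit circle at +/-f0, poles just inside at radius r."""
    w0 = 2.0 * math.pi * f0 / fs
    b = np.array([1.0, -2.0 * math.cos(w0), 1.0])
    a = np.array([1.0, -2.0 * r * math.cos(w0), r * r])
    b *= a.sum() / b.sum()                             # normalise to unit gain at DC
    y = np.zeros(len(x))
    x1 = x2 = y1 = y2 = 0.0                            # filter state (zero initial conditions)
    for n, xn in enumerate(np.asarray(x, dtype=float)):
        yn = b[0] * xn + b[1] * x1 + b[2] * x2 - a[1] * y1 - a[2] * y2
        y[n] = yn
        x2, x1 = x1, xn
        y2, y1 = y1, yn
    return y

# Usage: clean = notch(remove_baseline(raw_ecg, fs=500), fs=500)
```

Fixed filters like these cannot untangle signals that overlap in frequency, such as EMG contaminating an ECG, which is where the separation techniques described above come in.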

Contact Supervisor Clive Cheong Took

Non-invasive cardiovascular disease risk prediction

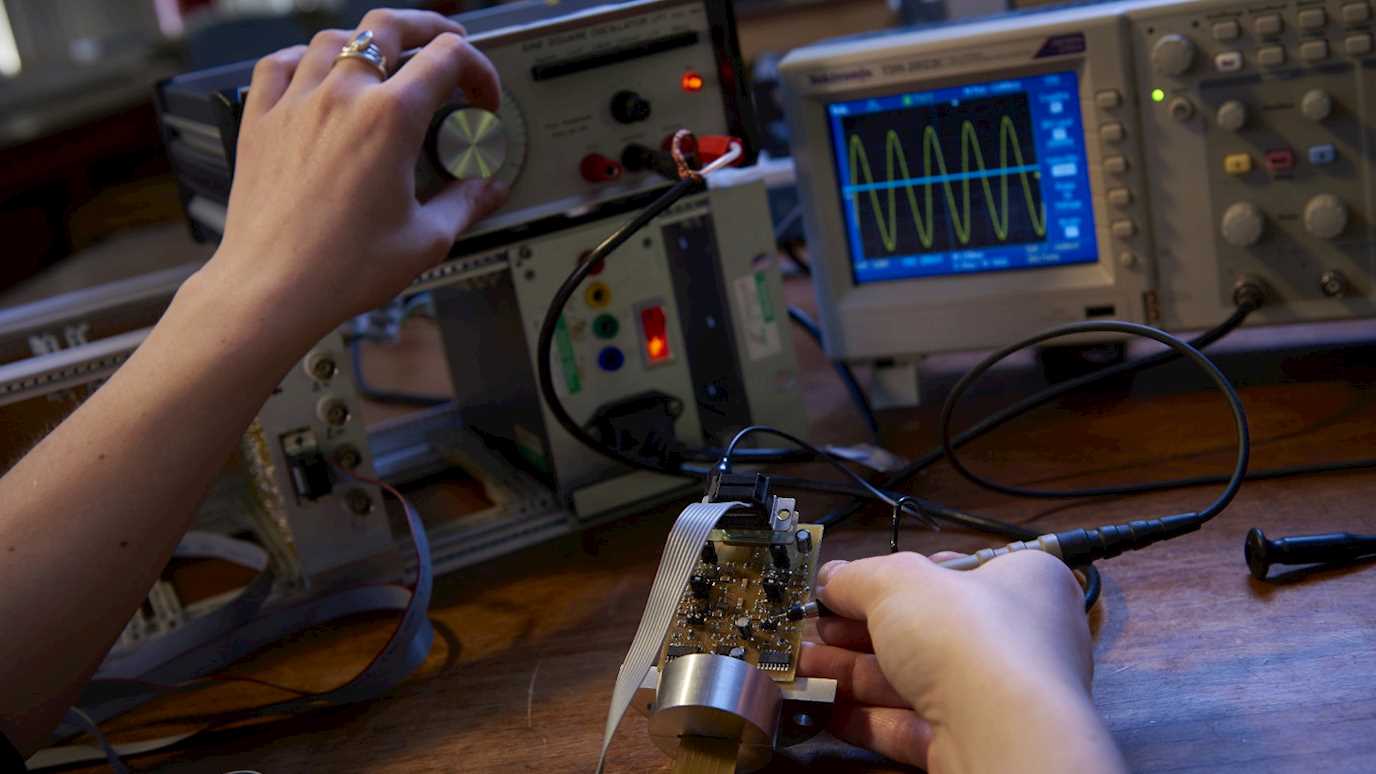

Cardiovascular disease (CVD) is currently the leading cause of death worldwide. CVD often goes undetected until complications appear, and many sufferers go unchecked because insufficient resources are available for regular screening. The early detection of the onset of CVD is therefore vital for effective prevention and therapy. This research project proposes that photoplethysmography (PPG) could be the basis for an inexpensive and effective method of assessing cardiovascular disease risk. PPG is a non-invasive technique for measuring cardiovascular health by sensing the change in blood volume in the finger pulp as the heart pumps. A PPG sensor consists of an infrared (IR) LED that transmits an IR signal through the subject's fingertip, part of which is reflected by the blood cells. The blood volume changing with each heartbeat produces a train of pulses at the output, often called the Digital Volume Pulse (DVP) waveform. It is envisaged that such a device could be connected to an embedded microprocessor via an analogue-to-digital converter for signal processing and analysis. A proof of concept for using the DVP waveform to assess cardiovascular fitness already exists and will form the basis of this research project, which will endeavour to extend that work with original research into improved sensing and signal processing techniques to increase the sensitivity and specificity of the test.
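A first processing step on the digitised DVP waveform is usually locating each systolic peak, from which the pulse rate and beat-by-beat features can be derived. The sketch below uses a simple amplitude threshold plus a refractory interval; the 0.33 s minimum beat spacing and the mid-range threshold are assumptions, the function name is hypothetical, and the risk-related DVP features this project targets are not shown.

```python
import numpy as np

def pulse_rate_bpm(dvp, fs, min_interval_s=0.33):
    """Estimate pulse rate (beats/min) from a sampled DVP/PPG trace.

    A sample is taken as a systolic peak if it is a local maximum, lies above
    the midpoint of the trace's range (to skip the smaller diastolic peak), and
    falls at least min_interval_s after the previous accepted peak.
    """
    x = np.asarray(dvp, dtype=float)
    threshold = x.min() + 0.5 * (x.max() - x.min())
    min_gap = int(min_interval_s * fs)
    peaks = []
    for i in range(1, len(x) - 1):
        if x[i] > threshold and x[i] >= x[i - 1] and x[i] > x[i + 1]:
            if not peaks or i - peaks[-1] >= min_gap:
                peaks.append(i)
    if len(peaks) < 2:
        raise ValueError("need at least two beats")
    intervals_s = np.diff(peaks) / fs          # time between successive beats
    return 60.0 / intervals_s.mean()
```

On an embedded microprocessor the same logic can run sample by sample as data arrive from the analogue-to-digital converter, since it only looks one sample ahead.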

Contact Supervisor Clive Cheong Took

To apply for an MSc by Research or PhD within the Voice and Audio Group, please email the Head of Group, Professor Howard, at david.howard@royalholloway.ac.uk with details of your research interests, a summary of your academic qualifications (a brief one- to two-page CV will be useful) and whether you are self-funded or require funding. After you have liaised with Professor Howard, you will be able to register an account on Royal Holloway Direct to formally submit your application.

More details on the application process can be found here.

For more information on postdoctoral, fellowship and visiting opportunities, please email the Head of Group, Professor Howard, at david.howard@royalholloway.ac.uk with your CV and a brief statement of your research interests and career aspirations.

Deep Learning for brain signals

Deep neural networks (DNNs) are among the most popular and powerful machine learning systems, used by companies such as Facebook and Google. It is therefore no surprise that their successful applications have been in activities relevant to the cyber-world, such as automated captioning of YouTube videos and facial recognition in Facebook images. However, deep learning has been exploited far less for the analysis of biomedical data. In this direction, we have investigated how to reconstruct signals generated deep inside the brain from non-invasive measurements taken from the scalp. This is useful because it circumvents the need for brain surgery to take a closer “picture” of brain activity, and it can find applications in rehabilitation, such as the prognosis of patients suffering from epilepsy. These brain signals, called the electroencephalogram (EEG), can also be analysed for other purposes, such as predicting a person’s movement, the analysis and prognosis of diseases such as Parkinson’s disease and schizophrenia, and monitoring mental fatigue. Project supervisor Dr Cheong Took. Project reference: CT1
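The core idea of learning a scalp-to-source mapping can be illustrated on a toy problem. The sketch below assumes three hypothetical "deep" sources mixed linearly onto 16 scalp electrodes through a random matrix standing in for a head model, and trains a one-hidden-layer network with plain NumPy backpropagation to recover the sources. Real EEG source reconstruction involves realistic head models, temporal structure and much deeper networks; this is not the project's architecture.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical toy data: 3 sources linearly mixed onto 16 electrodes, plus sensor noise.
N_SOURCES, N_ELECTRODES, N_SAMPLES = 3, 16, 2000
mixing = rng.normal(size=(N_ELECTRODES, N_SOURCES))         # stand-in for a head model
sources = rng.normal(size=(N_SAMPLES, N_SOURCES))
scalp = sources @ mixing.T + 0.05 * rng.normal(size=(N_SAMPLES, N_ELECTRODES))

def init_params(n_in, n_hidden, n_out, rng):
    return {
        "w1": rng.normal(scale=0.5 / np.sqrt(n_in), size=(n_in, n_hidden)),
        "b1": np.zeros(n_hidden),
        "w2": rng.normal(scale=1.0 / np.sqrt(n_hidden), size=(n_hidden, n_out)),
        "b2": np.zeros(n_out),
    }

def forward(p, x):
    """tanh hidden layer followed by a linear read-out of the estimated sources."""
    h = np.tanh(x @ p["w1"] + p["b1"])
    return h, h @ p["w2"] + p["b2"]

def train(p, x, y, lr=0.05, epochs=2000):
    """Full-batch gradient descent on the mean-squared error; returns the loss history."""
    n = len(x)
    losses = []
    for _ in range(epochs):
        h, pred = forward(p, x)
        err = pred - y
        losses.append(float(np.mean(err ** 2)))
        # Backpropagation (constant factors folded into the learning rate).
        grad_h = (err @ p["w2"].T) * (1.0 - h ** 2)
        p["w2"] -= lr * h.T @ err / n
        p["b2"] -= lr * err.mean(axis=0)
        p["w1"] -= lr * x.T @ grad_h / n
        p["b1"] -= lr * grad_h.mean(axis=0)
    return losses

train_x, test_x = scalp[:1500], scalp[1500:]
train_y, test_y = sources[:1500], sources[1500:]
params = init_params(N_ELECTRODES, 32, N_SOURCES, rng)
history = train(params, train_x, train_y)
_, test_pred = forward(params, test_x)
test_mse = float(np.mean((test_pred - test_y) ** 2))
print(f"train MSE {history[0]:.3f} -> {history[-1]:.3f}, held-out MSE {test_mse:.3f}")
```

Evaluating on held-out samples, as done here, is what indicates whether the network has learned the inverse mapping rather than memorised the training data.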

New Methods and Theories for 3D and 4D Signal Processing

Traditional signal processing and machine learning applications rely on learning methods in the real- and complex-valued domains. However, modern technologies have fuelled an ever-increasing number of emerging applications in which signals rely on unconventional algebraic structures (e.g., non-commutative ones). In this context, advanced complex- and hypercomplex-valued signal processing encompasses many of these challenging areas. In the complex domain, augmented statistics have been found to be very effective in various machine learning and nonlinear signal processing methods. Processing signals in hypercomplex domains, however, enables us to exploit different properties, albeit while raising challenges in designing and implementing new and more effective learning algorithms. More generally, learning in the hypercomplex domain allows us to process multidimensional data as a single entity rather than modelling it as a multichannel entity, hence preserving the integrity of the data. In that direction, quaternions have attracted attention in the signal processing and machine learning communities for their ability to deal with 3D and 4D models, providing an exciting area in which to propose new signal processing methodologies. Project supervisor Dr Cheong Took. Project reference: CT2
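The two quaternion properties mentioned above, non-commutative multiplication and the ability to handle 3-D data as a single entity, can be seen in a few lines. The sketch below implements the Hamilton product and rotates a 3-D vector via q v q*. It shows the algebra that quaternion-valued learning builds on, not a learning algorithm itself; the class and function names are illustrative.

```python
import math
from dataclasses import dataclass

@dataclass(frozen=True)
class Quaternion:
    w: float
    x: float
    y: float
    z: float

    def __mul__(self, o: "Quaternion") -> "Quaternion":
        """Hamilton product; in general p * q != q * p."""
        return Quaternion(
            self.w * o.w - self.x * o.x - self.y * o.y - self.z * o.z,
            self.w * o.x + self.x * o.w + self.y * o.z - self.z * o.y,
            self.w * o.y - self.x * o.z + self.y * o.w + self.z * o.x,
            self.w * o.z + self.x * o.y - self.y * o.x + self.z * o.w,
        )

    def conj(self) -> "Quaternion":
        return Quaternion(self.w, -self.x, -self.y, -self.z)

def rotate(v, axis, angle):
    """Rotate 3-D vector v about a unit-length axis by angle (radians) using q v q*."""
    s = math.sin(angle / 2.0)
    q = Quaternion(math.cos(angle / 2.0), axis[0] * s, axis[1] * s, axis[2] * s)
    p = q * Quaternion(0.0, *v) * q.conj()     # the 3-D vector is carried as a pure quaternion
    return (p.x, p.y, p.z)

i, j = Quaternion(0, 1, 0, 0), Quaternion(0, 0, 1, 0)
print(i * j, j * i)                                  # k and -k: order matters
print(rotate((1.0, 0.0, 0.0), (0.0, 0.0, 1.0), math.pi / 2))
```

A 3-D sample (x, y, z) or a 4-D sample (w, x, y, z) is stored in one `Quaternion` and transformed as a whole, rather than as three or four separate channels.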

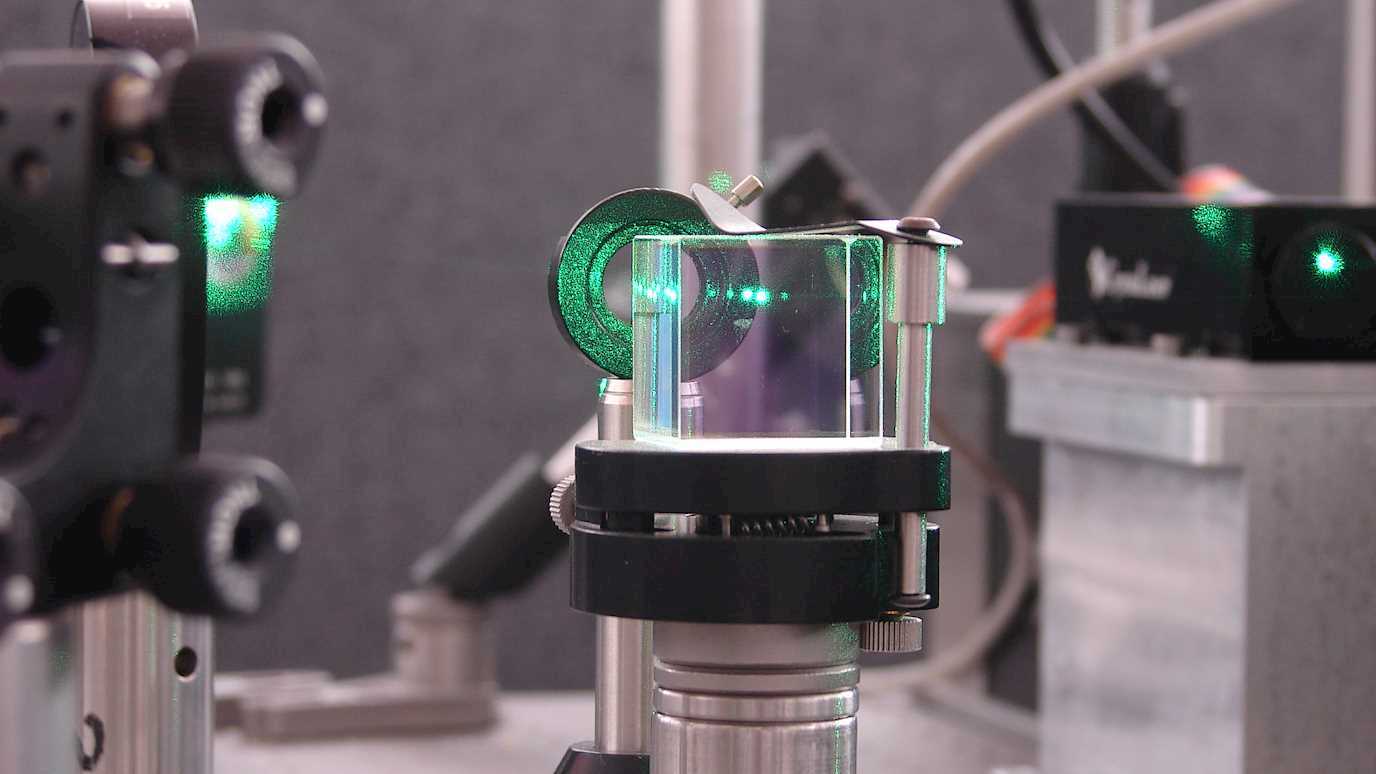

Neuromorphic MPSoC for Olfaction

The recent breakthroughs in designing customised neuromorphic chips capable of computing complex neural algorithms, together with a better understanding of biological olfactory mechanisms, offer the perfect starting point for investigating new neuromorphic algorithms for reconfigurable software-hardware heterogeneous platforms with applications in artificial olfaction. The research will explore computational models capable of deploying many parallel computing cores on low-cost heterogeneous multiprocessor system-on-chip platforms, and of coping with the smell recognition challenges that arise from very short, transient olfactory signal measurements made in noisy environmental conditions. The project seeks to achieve ultra-rapid olfactory measurements with possible applications in health monitoring and 3D odorant plume mapping. Project supervisor Dr Alin Tisan. Project reference: AT1
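A basic building block of many neuromorphic systems is the leaky integrate-and-fire (LIF) neuron, which turns an analogue input into spikes. The sketch below drives one with a hypothetical, exponentially decaying sensor transient; a stronger stimulus fires earlier and more often, so the first-spike time alone can carry information within a few milliseconds. The time constants, threshold and stimulus shape are assumptions, and this is not the project's model.

```python
import math

def lif_spike_times(current, dt=1e-3, tau=0.02, threshold=1.0, reset=0.0):
    """Leaky integrate-and-fire neuron, dv/dt = (-v + I(t)) / tau, integrated with forward Euler.

    Returns the times (s) at which v reaches threshold; v is reset after each spike.
    """
    v = reset
    spikes = []
    for n, i_in in enumerate(current):
        v += dt * (-v + i_in) / tau
        if v >= threshold:
            spikes.append(n * dt)
            v = reset
    return spikes

def sensor_transient(peak, n=200, dt=1e-3, decay_s=0.05):
    """Hypothetical gas-sensor response: a jump to `peak` that decays exponentially."""
    return [peak * math.exp(-k * dt / decay_s) for k in range(n)]

for peak in (3.0, 6.0):
    spikes = lif_spike_times(sensor_transient(peak))
    print(f"peak {peak}: {len(spikes)} spikes, first at {spikes[0] * 1e3:.0f} ms")
```

Because each neuron only integrates, compares and resets, many of them can run in parallel on the cores of a heterogeneous MPSoC.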

Autonomous non-invasive indoor abnormality detector for elderly occupants

The growing elderly population requires a rise in the provision of specialised support services, which is putting existing resources under strain as the support requirements of the elderly grow. The dramatic change in human interaction and behaviour triggered by the recent pandemic has imposed general social distancing, forcing seniors to stay in their own homes whenever possible. The research will explore the development of an end-to-end system, based on a distributed computing paradigm, for the non-invasive detection of abnormal ambient phenomena (characterised by acoustic, olfactory and electromagnetic signals), providing an effective care-monitoring and decision-making service for domestic environments. Project supervisor Dr Alin Tisan. Project reference: AT2
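At its simplest, detecting an "abnormal ambient phenomenon" means flagging readings that depart sharply from a learned baseline. The sketch below does this for a single sensor stream with a rolling z-score; the 30-sample window and threshold of 4 standard deviations are assumptions, and the proposed system would fuse acoustic, olfactory and electromagnetic streams across distributed nodes rather than use one statistic.

```python
import math
from collections import deque

def detect_anomalies(readings, window=30, z_thresh=4.0):
    """Return indices of readings more than z_thresh standard deviations from the rolling baseline.

    The baseline is the mean and sample standard deviation of the previous `window`
    readings; no decision is made until the window is full.
    """
    history = deque(maxlen=window)
    flagged = []
    for i, r in enumerate(readings):
        if len(history) == window:
            mean = sum(history) / window
            var = sum((h - mean) ** 2 for h in history) / (window - 1)
            std = max(math.sqrt(var), 1e-9)     # guard against perfectly flat input
            if abs(r - mean) / std > z_thresh:
                flagged.append(i)
        history.append(r)
    return flagged
```

Running one detector per sensor on each node and forwarding only flagged events keeps network traffic low, which suits the distributed computing paradigm the project proposes.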